CDH 部署方式有三种:

bin在线部署,需要访问外网rpm离线部署,但要下载相应的依赖包,不是真正的离线部署(还是需要访问外网或私服)tar离线部署,真正的CDH离线部署方式

CDH离线不是分为三部分:MySQL、CM、Parcel 文件;其中 MySQL 存储元数据,CM (cloudera-manager) 是大数据集群安装部署利器, Parcel 文件包含的大数据组件 (yarn、hdfs、zk、hive … ) 二进制包。

准备

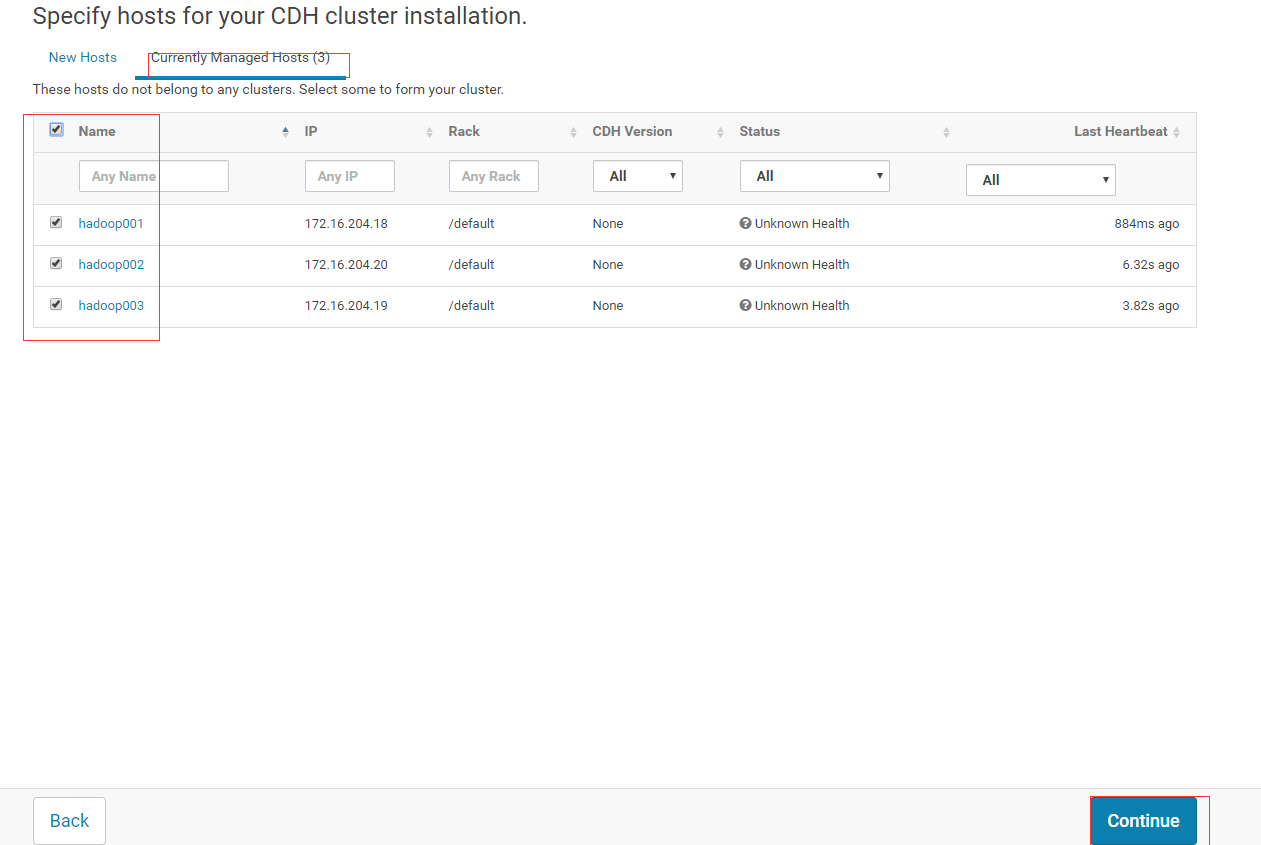

本节是在阿里云上进行部署,server = hadoop001,agent = hadoop001 hadoop2 hadoop3 。

阿里云

三台机子( 2核 8G),操作系统 CentOS 7.2

hosts

编辑 /etc/hosts 文件,注意 ip 写内网(只要不释放内网 ip 不变)

172.16.204.18 hadoop001

172.16.204.20 hadoop002

172.16.204.19 hadoop003

防火墙

本次操作是在云主机上,默认都是关闭的需要在 web 界面配置:实例 -> 更多 ->网络和安全组 -> 安全组配置->配置规则,在此界面配置出/入方向端口策略。

若在内网服务器,可在部署时先关闭防火墙,等部署后之后,再通过 CDHweb 界面将防火墙开启。操作命令如下

systemctl stop firewalld

systemctl disable firewalld

iptables -L // 查询防火墙规则

iptables -F // 删除防火墙规则

SELinux

关闭 SELinux

vi /etc/selinux/config

将 SELINUX=enforcing 改为 SELINUX=disabled

重启生效

时区和时钟同步

-

时区

-

执行

date查看当前时间并知道时间格式[root@hadoop002 ~]# date Wed Oct 9 17:17:26 CST 2019 // CST 中部标准时间 才是我们需要的,否则要修改 -

timedatectl命令可查看和设置时区[root@hadoop002 ~]# timedatectl Local time: Wed 2019-10-09 17:14:14 CST // 本地时间 Universal time: Wed 2019-10-09 09:14:14 UTC RTC time: Wed 2019-10-09 17:14:13 Time zone: Asia/Shanghai (CST, +0800) // 时区 NTP enabled: yes NTP synchronized: yes RTC in local TZ: yes DST active: n/a // 设置时区 [root@hadoop002 ~]# timedatectl set-timezone Asia/Shanghai

-

-

时钟同步

ntp实现机器之间的时间同步yum install -y ntp本次操作将

hadoop001当作主节点,hadoop002 -- 003当作从节点:主节点从网络拉取时间,从节点从主节点拉取时间-

主节点

[root@hadoop001 ~]# vi /etc/ntp.conf driftfile /var/lib/ntp/drift pidfile /var/run/ntpd.pid logfile /var/log/ntp.log # 添加以下内容 # time 网络获取 server 0.asia.pool.ntp.org server 1.asia.pool.ntp.org server 2.asia.pool.ntp.org server 3.asia.pool.ntp.org # 当外部时间不可⽤时,可使⽤本地硬件时间 server 127.127.1.0 iburst local clock # 允许哪些⽹段的机器来同步时间(当前阿里云的内网网段) restrict 172.16.204.0 mask 255.255.255.0 nomodify notrap ...[root@hadoop001 ~]# systemctl start ntpd [root@hadoop001 ~]# systemctl status ntpd ntpd.service - Network Time Service Loaded: loaded (/usr/lib/systemd/system/ntpd.service; enabled; vendor preset: disabled) Active: active (`running`) since Wed 2019-10-09 17:32:19 CST; 2h 37min ago Main PID: 18685 (ntpd) CGroup: /system.slice/ntpd.service 鈹斺攢18685 /usr/sbin/ntpd -u ntp:ntp -g Oct 09 17:32:19 hadoop001 systemd[1]: Starting Network Time Service... Oct 09 17:32:19 hadoop001 ntpd[18685]: proto: precision = 0.089 usec Oct 09 17:32:19 hadoop001 ntpd[18685]: 0.0.0.0 c01d 0d kern kernel time sync enabled Oct 09 17:32:19 hadoop001 systemd[1]: Started Network Time Service. [root@hadoop001 ~]# ntpq -p // 验证 remote refid st t when poll reach delay offset jitter ============================================================================== `LOCAL(0) .LOCL. 10 l 158m 64 0 0.000 0.000 0.000` -120.25.115.20 10.137.53.7 2 u 349 512 377 28.395 -8.285 0.960 ... -

从节点

// 禁用 ntpd 服务 [root@hadoop002 ~]# systemctl stop ntpd [root@hadoop002 ~]# systemctl disable ntpd Removed symlink /etc/systemd/system/multi-user.target.wants/ntpd.service.// 从 hadoop001 同步时间 [root@hadoop002 ~]# /usr/sbin/ntpdate hadoop001 9 Oct 20:33:40 ntpdate[18832]: adjust time server 172.16.204.18 offset 0.003356 sec// 每次都要手动执行同步时间太繁琐,在 crontab 定时 [root@hadoop003 ~]# crontab -e # 每小时同步时间 00 * * * * /usr/sbin/ntpdate hadoop001

-

JDK

jdk必须安装在/usr/java目录

[root@hadoop003 ~]# mkdir /usr/java

[root@hadoop003 ~]# tar -zxvf cdh5.16/jdk-8u45-linux-x64.gz -C /usr/java/

// 用户和用户组的修正

[root@hadoop003 ~]# chown -R root:root /usr/java/jdk1.8.0_45

// 配置 Java 环境变量

[root@hadoop001 ~]# vi /etc/profile

# env

export JAVA_HOME=/usr/java/jdk1.8.0_45

export PATH=$JAVA_HOME/bin:$PATH

[root@hadoop001 ~]# source /etc/profile

[root@hadoop001 ~]# which java

/usr/java/jdk1.8.0_45/bin/java

MySQL

MySQL安装目录/usr/local,并安装在hadoop001机器

-

安装

[root@hadoop001 cdh5.16]# tar -zxvf mysql-5.7.11-linux-glibc2.5-x86_64.tar.gz -C /usr/local [root@hadoop001 cdh5.16]# mv /usr/local/mysql-5.7.11-linux-glibc2.5-x86_64/ /usr/local/mysql [root@hadoop001 cdh5.16]# cd /usr/local/ [root@hadoop001 local]# mkdir mysql/arch mysql/data mysql/tmp -

my.cnf[root@hadoop001 cdh5.16]# vi /etc/my.cnf //首先 gg 移动到首行,然后输入 `:%d` 清除整个文件内容,最后将下面内容复制到文件 [client] port = 3306 socket = /usr/local/mysql/data/mysql.sock default-character-set=utf8mb4 [mysqld] port = 3306 socket = /usr/local/mysql/data/mysql.sock skip-slave-start skip-external-locking key_buffer_size = 256M sort_buffer_size = 2M read_buffer_size = 2M read_rnd_buffer_size = 4M query_cache_size= 32M max_allowed_packet = 16M myisam_sort_buffer_size=128M tmp_table_size=32M table_open_cache = 512 thread_cache_size = 8 wait_timeout = 86400 interactive_timeout = 86400 max_connections = 600 # Try number of CPU's*2 for thread_concurrency #thread_concurrency = 32 #isolation level and default engine default-storage-engine = INNODB transaction-isolation = READ-COMMITTED # 主从 id 不能重复 server-id = 1739 # 限定目录 basedir = /usr/local/mysql datadir = /usr/local/mysql/data pid-file = /usr/local/mysql/data/hostname.pid #open performance schema log-warnings sysdate-is-now binlog_format = ROW log_bin_trust_function_creators=1 log-error = /usr/local/mysql/data/hostname.err log-bin = /usr/local/mysql/arch/mysql-bin expire_logs_days = 7 innodb_write_io_threads=16 # 从机 relay-log 配置 relay-log = /usr/local/mysql/relay_log/relay-log relay-log-index = /usr/local/mysql/relay_log/relay-log.index relay_log_info_file= /usr/local/mysql/relay_log/relay-log.info log_slave_updates=1 gtid_mode=OFF enforce_gtid_consistency=OFF # slave slave-parallel-type=LOGICAL_CLOCK slave-parallel-workers=4 master_info_repository=TABLE relay_log_info_repository=TABLE relay_log_recovery=ON #other logs #general_log =1 #general_log_file = /usr/local/mysql/data/general_log.err #slow_query_log=1 #slow_query_log_file=/usr/local/mysql/data/slow_log.err #for replication slave sync_binlog = 500 # for innodb options # 限定目录 innodb_data_home_dir = /usr/local/mysql/data/ innodb_data_file_path = ibdata1:1G;ibdata2:1G:autoextend innodb_log_group_home_dir = /usr/local/mysql/arch innodb_log_files_in_group = 4 innodb_log_file_size = 1G innodb_log_buffer_size = 200M # 根据生产需要,调整pool size,当前机器是 8G 这里只写 2G,实际生产要比这大 innodb_buffer_pool_size = 2G # innodb_additional_mem_pool_size = 50M #deprecated in 5.6 tmpdir = /usr/local/mysql/tmp innodb_lock_wait_timeout = 1000 #innodb_thread_concurrency = 0 innodb_flush_log_at_trx_commit = 2 innodb_locks_unsafe_for_binlog=1 # innodb io features: add for mysql5.5.8 performance_schema innodb_read_io_threads=4 innodb-write-io-threads=4 innodb-io-capacity=200 # purge threads change default(0) to 1 for purge innodb_purge_threads=1 innodb_use_native_aio=on # case-sensitive file names and separate tablespace innodb_file_per_table = 1 lower_case_table_names=1 [mysqldump] quick max_allowed_packet = 128M [mysql] no-auto-rehash default-character-set=utf8mb4 [mysqlhotcopy] interactive-timeout [myisamchk] key_buffer_size = 256M sort_buffer_size = 256M read_buffer = 2M write_buffer = 2M -

创建用户组及用户[root@hadoop001 cdh5.16]# groupadd -g 101 dba [root@hadoop001 local]# useradd -u 514 -g dba -G root -d /usr/local/mysql mysqladmin useradd: warning: the home directory already exists. Not copying any file from skel directory into it. [root@hadoop001 local]# id mysqladmin uid=514(mysqladmin) gid=101(dba) groups=101(dba),0(root) -

环境变量# 拷贝环境变量到 mysqladmin home 目录,省得重头写 [root@hadoop001 local]# ls -l /etc/skel/.* -rw-r--r-- 1 root root 18 Dec 7 2016 /etc/skel/.bash_logout -rw-r--r-- 1 root root 193 Dec 7 2016 /etc/skel/.bash_profile -rw-r--r-- 1 root root 231 Dec 7 2016 /etc/skel/.bashrc [root@hadoop001 local]# cp /etc/skel/.* /usr/local/mysql# 配置环境变量 [root@hadoop001 local]# vi mysql/.bash_profile # .bash_profile # Get the aliases and functions if [ -f ~/.bashrc ]; then . ~/.bashrc fi # User specific environment and startup programs export MYSQL_BASE=/usr/local/mysql export PATH=${MYSQL_BASE}/bin:$PATH unset USERNAME #stty erase ^H set umask to 022 umask 022 PS1=`uname -n`":"'$USER'":"'$PWD'":>"; export PS1 -

赋权限

# 赋权限和用户组,切换用户mysqladmin [root@hadoop001 local]# chown mysqladmin:dba /etc/my.cnf [root@hadoop001 local]# chmod 640 /etc/my.cnf [root@hadoop001 local]# chown -R mysqladmin:dba /usr/local/mysql [root@hadoop001 local]# chmod -R 755 /usr/local/mysql -

配置服务及开机自启动

[root@hadoop001 local]# cd /usr/local/mysql # 将服务文件拷贝到init.d下,并重命名为mysql [root@hadoop001 mysql]# cp support-files/mysql.server /etc/rc.d/init.d/mysql # 赋予可执行权限 [root@hadoop001 mysql]# chmod +x /etc/rc.d/init.d/mysql # 删除服务 [root@hadoop001 mysql]# chkconfig --del mysql # 添加服务 [root@hadoop001 mysql]# chkconfig --add mysql # 自启动 [root@hadoop001 mysql]# chkconfig --level 345 mysql on [root@hadoop001 mysql]# chkconfig --list Note: This output shows SysV services only and does not include native systemd services. SysV configuration data might be overridden by native systemd configuration. If you want to list systemd services use 'systemctl list-unit-files'. To see services enabled on particular target use 'systemctl list-dependencies [target]'. aegis 0:off 1:off 2:on 3:on 4:on 5:on 6:off 'mysql 0:off 1:off 2:on 3:on 4:on 5:on 6:off' netconsole 0:off 1:off 2:off 3:off 4:off 5:off 6:off network 0:off 1:off 2:on 3:on 4:on 5:on 6:off # 等级0表示:表示关机 # 等级1表示:单用户模式 # 等级2表示:无网络连接的多用户命令行模式 # 等级3表示:有网络连接的多用户命令行模式 # 等级4表示:不可用 # 等级5表示:带图形界面的多用户模式 # 等级6表示:重新启动 -

安装

libaio及安装mysql的初始db[root@hadoop001 mysql]# yum -y install libaio [root@hadoop001 mysql]# su - mysqladmin Last login: Thu Oct 10 21:25:46 CST 2019 on pts/0 hadoop001:mysqladmin:/usr/local/mysql:>bin/mysqld \ > --defaults-file=/etc/my.cnf \ > --user=mysqladmin \ > --basedir=/usr/local/mysql/ \ > --datadir=/usr/local/mysql/data/ \ > --initialize # 在初始化时如果加上 –initial-insecure,则会创建空密码的 root@localhost 账号,否则会创建带密码的 root@localhost 账号,密码直接写在 log-error 日志文件中 #(在5.6版本中是放在 ~/.mysql_secret 文件里,更加隐蔽,不熟悉的话可能会无所适从)# 查看临时密码 hadoop001:mysqladmin:/usr/local/mysql:>cat data/hostname.err |grep password 2019-10-10T13:32:10.402764Z 1 [Note] A temporary password is generated for root@localhost: 'Yqouhpfkf2?%' -

启动

hadoop001:mysqladmin:/usr/local/mysql:>/usr/local/mysql/bin/mysqld_safe --defaults-file=/etc/my.cnf & [1] 2373 hadoop001:mysqladmin:/usr/local/mysql:>2019-10-10T13:39:18.600605Z mysqld_safe Logging to '/usr/local/mysql/data/hostname.err'. 2019-10-10T13:39:18.665593Z mysqld_safe Starting mysqld daemon with databases from /usr/local/mysql/data # 查看 MySQL 状态 hadoop001:mysqladmin:/usr/local/mysql:>exit logout [root@hadoop001 mysql]# ps -ef| grep mysql mysqlad+ 2373 1 0 21:39 pts/0 00:00:00 /bin/sh /usr/local/mysql/bin/mysqld_safe --defaults-file=/etc/my.cnf mysqlad+ 3190 2373 2 21:39 pts/0 00:00:00 /usr/local/mysql/bin/mysqld --defaults-file=/etc/my.cnf --basedir=/usr/local/mysql --datadir=/usr/local/mysql/data --plugin-dir=/usr/local/mysql/lib/plugin --log-error=/usr/local/mysql/data/hostname.err --pid-file=/usr/local/mysql/data/hostname.pid --socket=/usr/local/mysql/data/mysql.sock --port=3306 root 3219 2110 0 21:39 pts/0 00:00:00 grep --color=auto mysql [root@hadoop001 mysql]# netstat -nlp | grep 3190 tcp6 0 '0 :::3306' :::* LISTEN 3190/mysqld unix 2 [ ACC ] STREAM LISTENING 22291 3190/mysqld /usr/local/mysql/data/mysql.sock -

修改用户密码

[root@hadoop001 mysql]# su - mysqladmin Last login: Thu Oct 10 21:30:57 CST 2019 on pts/0 hadoop001:mysqladmin:/usr/local/mysql:>mysql -uroot -p'Yqouhpfkf2?%' # 此时需要先修改 root 密码才能进行后续操作 mysql> alter user root@localhost identified by '123456'; Query OK, 0 rows affected (0.00 sec) mysql> GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY '123456' ; Query OK, 0 rows affected, 1 warning (0.00 sec) # 刷新权限 mysql> flush privileges; Query OK, 0 rows affected (0.02 sec) mysql> exit -

重启

hadoop001:mysqladmin:/usr/local/mysql:>service mysql restart Shutting down MySQL..2019-10-10T13:44:08.320218Z mysqld_safe mysqld from pid file /usr/local/mysql/data/hostname.pid ended [ OK ] Starting MySQL..[ OK ] hadoop001:mysqladmin:/usr/local/mysql:>mysql -uroot -p123456 mysql: [Warning] Using a password on the command line interface can be insecure. .....

创建 db 用户

-

cmf用户mysql> create database cmf DEFAULT CHARACTER SET utf8; Query OK, 1 row affected (0.00 sec) mysql> grant all on cmf.* TO 'cmf'@'%' IDENTIFIED BY '123456'; Query OK, 0 rows affected, 1 warning (0.00 sec) -

amon用户mysql> create database amon DEFAULT CHARACTER SET utf8; Query OK, 1 row affected (0.00 sec) mysql> grant all on amon.* TO 'amon'@'%' IDENTIFIED BY '123456'; Query OK, 0 rows affected, 1 warning (0.00 sec) # 刷新权限 mysql> flush privileges; Query OK, 0 rows affected (0.00 sec)

部署 jdbc jar

cmf、amon部署在哪个节点,那么此节点就需要部署mysql jdbc jar;本案例是在hadoop001

[root@hadoop001 mysql]# mkdir -p /usr/share/java

# 注意复制时去除版本号

[root@hadoop001 mysql]# cp /root/cdh5.16/mysql-connector-java-5.1.47.jar /usr/share/java/mysql-connector-java.jar

CM

默认目录

/opt/cloudera-manager

# 三台机器都要解压安装以及 agent 配置(hadoop001 - 003)

[root@hadoop003 ~]# mkdir /opt/cloudera-manager

[root@hadoop002 ~]# tar -zxvf /root/cdh5.16/cloudera-manager-centos7-cm5.16.1_x86_64.tar.gz -C /opt/cloudera-manager/

# 所有节点 agent 的配置,指向 server 的节点 hadoop001

[root@hadoop002 ~]# sed -i "s/server_host=localhost/server_host=hadoop001/g" /opt/cloudera-manager/cm-5.16.1/etc/cloudera-scm-agent/config.ini

# server 配置,在 hadoop001

[root@hadoop001 cloudera-scm-agent]# vi /opt/cloudera-manager/cm-5.16.1/etc/cloudera-scm-server/db.properties

com.cloudera.cmf.db.type=mysql

com.cloudera.cmf.db.host=hadoop001

com.cloudera.cmf.db.name=cmf

com.cloudera.cmf.db.user=cmf

com.cloudera.cmf.db.password=123456

com.cloudera.cmf.db.setupType=EXTERNAL

# 三台机子都要执行

# 创建 cloudera-scm 用户,--shell=/bin/false 指无需登陆,可在 /etc/passwd 修改

[root@hadoop002 ~]# useradd --system --home=/opt/cloudera-manager/cm-5.16.1/run/cloudera-scm-server/ --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm

# 修改文件归属

[root@hadoop001 cloudera-scm-agent]# chown -R cloudera-scm:cloudera-scm /opt/cloudera-manager

Parcel

默认目录

/opt/cloudera/parcel-repo

# server 机器 hadoop001 上执行

[root@hadoop001 cloudera-scm-agent]# mkdir -p /opt/cloudera/parcel-repo

# 拷贝相关文件

[root@hadoop001 cloudera-scm-agent]# cp /root/cdh5.16/CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel /opt/cloudera/parcel-repo/

# 注意目标文件后缀名是 sha,sha1 在部署过程 CM 会认为未下载成功继续下载

[root@hadoop001 cloudera-scm-agent]# cp /root/cdh5.16/CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha1 /opt/cloudera/parcel-repo/CDH-5.16.1-1.cdh5.16.1.p0.3-el7.parcel.sha

[root@hadoop001 cloudera-scm-agent]# cp /root/cdh5.16/manifest.json /opt/cloudera/parcel-repo/

# ⽬录修改⽤户及⽤户组

[root@hadoop001 cloudera-scm-agent]# chown -R cloudera-scm:cloudera-scm /opt/cloudera/

# 三台机器上执行

# 所有节点创建软件安装⽬录、⽤户及⽤户组权限(路径固定)

[root@hadoop001 cloudera-scm-agent]# mkdir -p /opt/cloudera/parcels

[root@hadoop001 cloudera-scm-agent]# chown -R cloudera-scm:cloudera-scm /opt/cloudera/

启动

server 启动

# hadoop001 执行

[root@hadoop001 init.d]# /opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-server start

# 看启动日志

[root@hadoop001 init.d]# tail -200f /opt/cloudera-manager/cm-5.16.1/log/cloudera-scm-server/cloudera-scm-server.log

# 等待一分钟后,显示如下表示启动成功

2019-10-10 22:50:39,733 INFO WebServerImpl:org.mortbay.log: Started SelectChannelConnector@0.0.0.0:7180

2019-10-10 22:50:39,733 INFO WebServerImpl:com.cloudera.server.cmf.WebServerImpl: Started Jetty server

# 此时需要hadoop001 开通 7180 端口就可以访问了

agent 启动

# hadoop001 - hadoop003

[root@hadoop001 init.d]# /opt/cloudera-manager/cm-5.16.1/etc/init.d/cloudera-scm-agent start

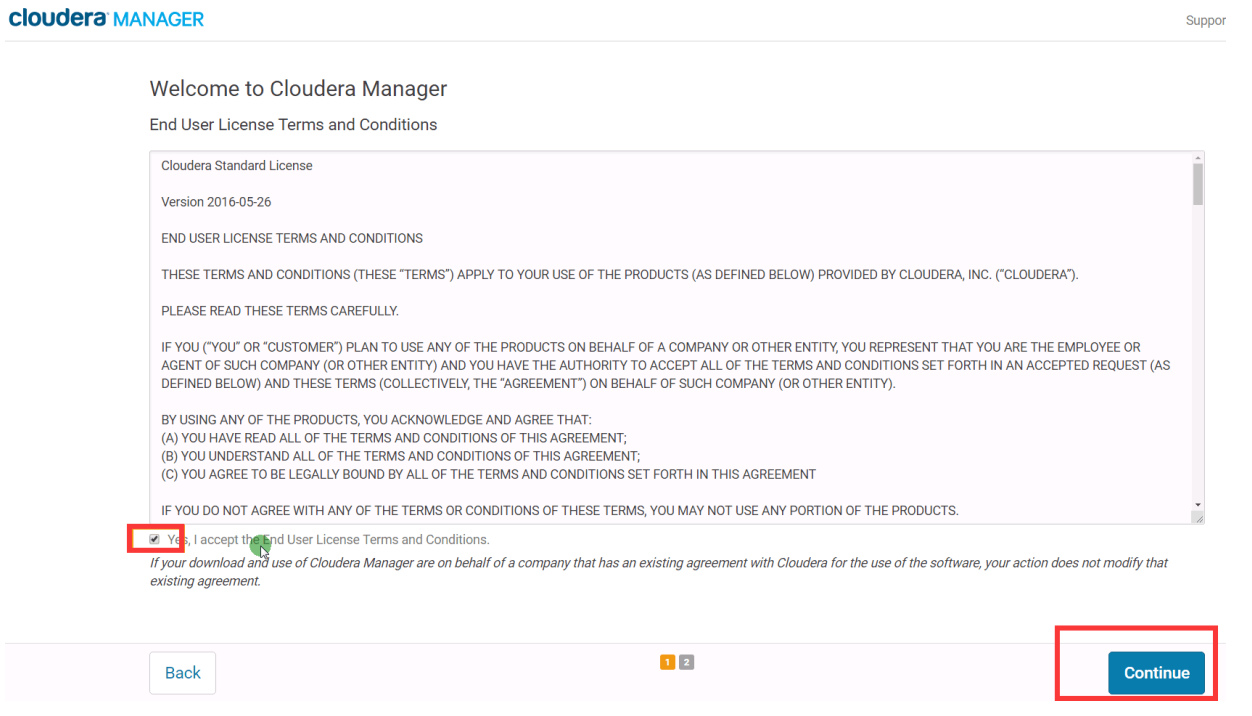

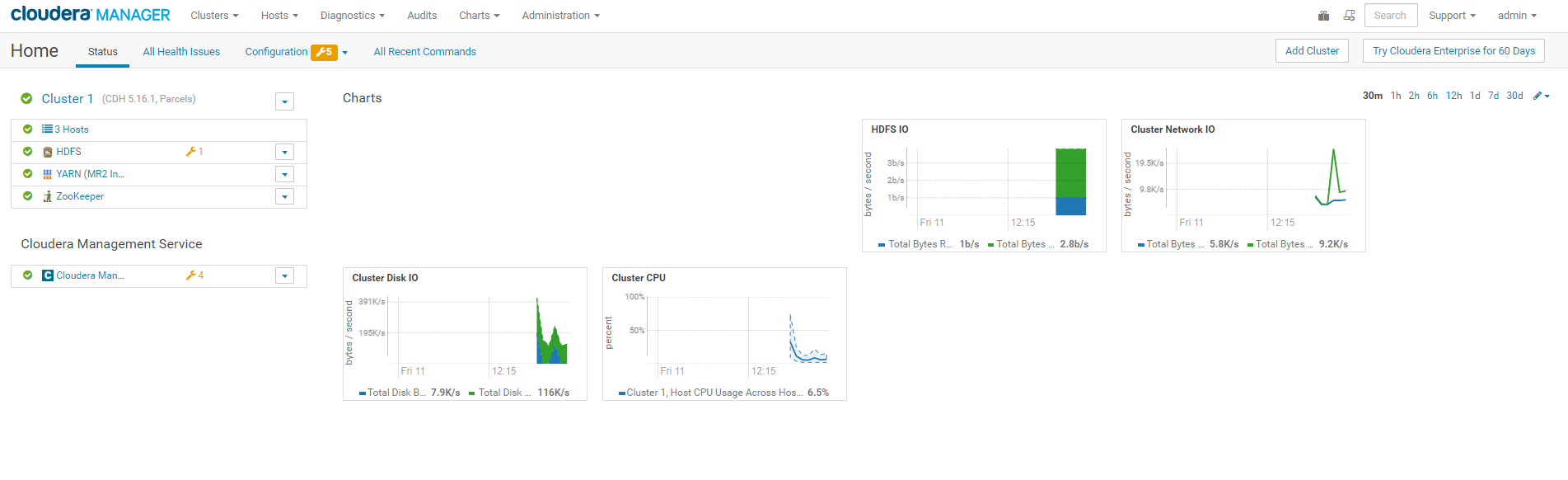

访问 hadoop001:7180,即可访问( 初始化账密 admin/admin )

Web 安装

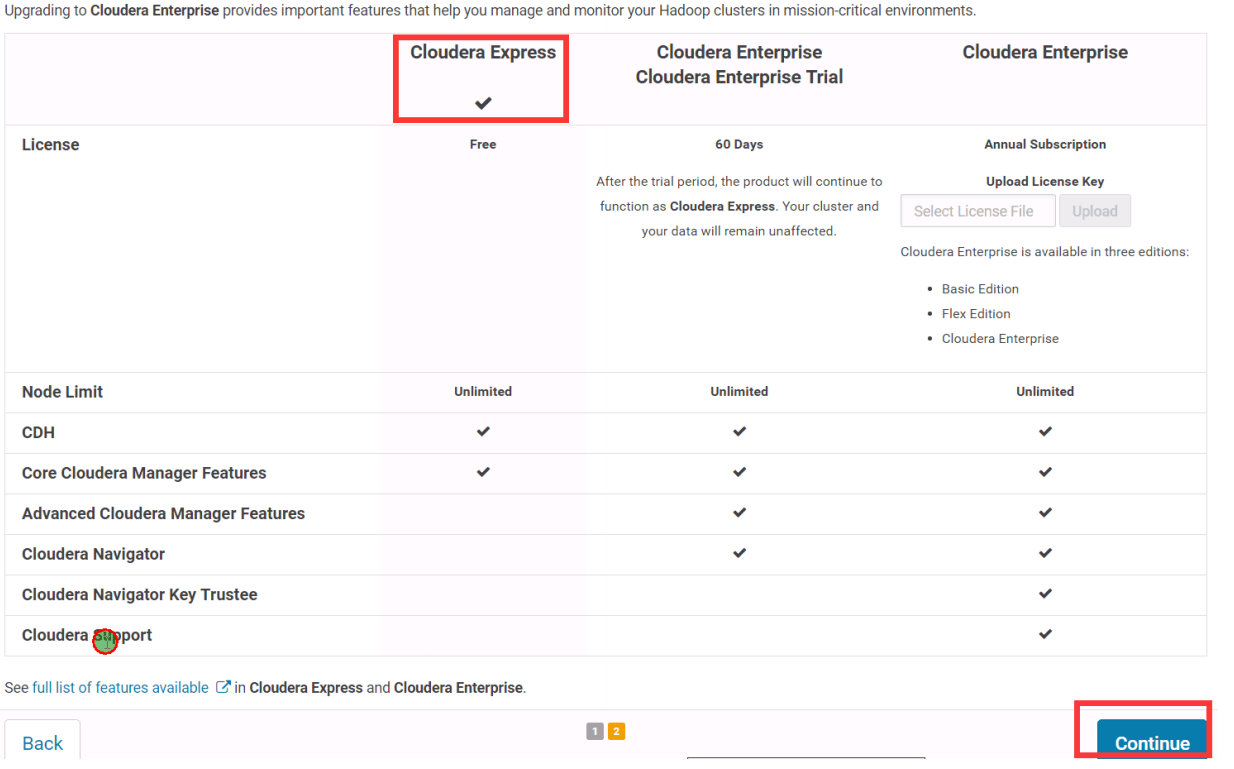

选择免费即可

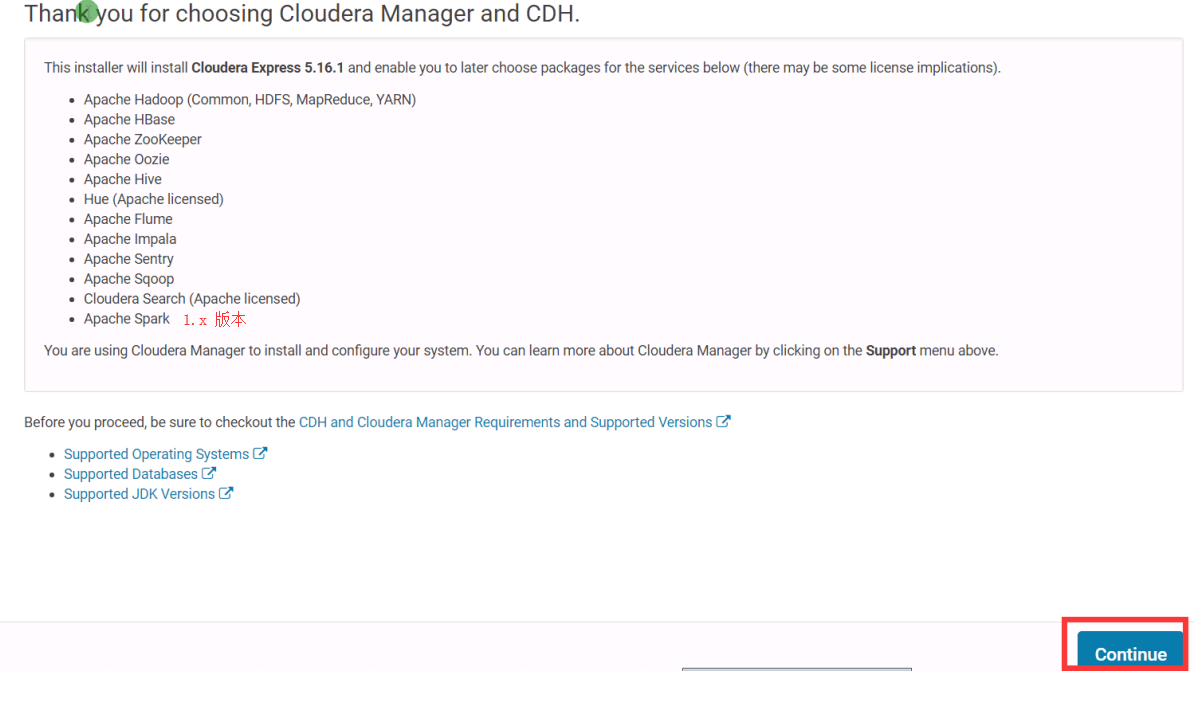

查看包含的大数据 组件

查看 agent 是否都在线

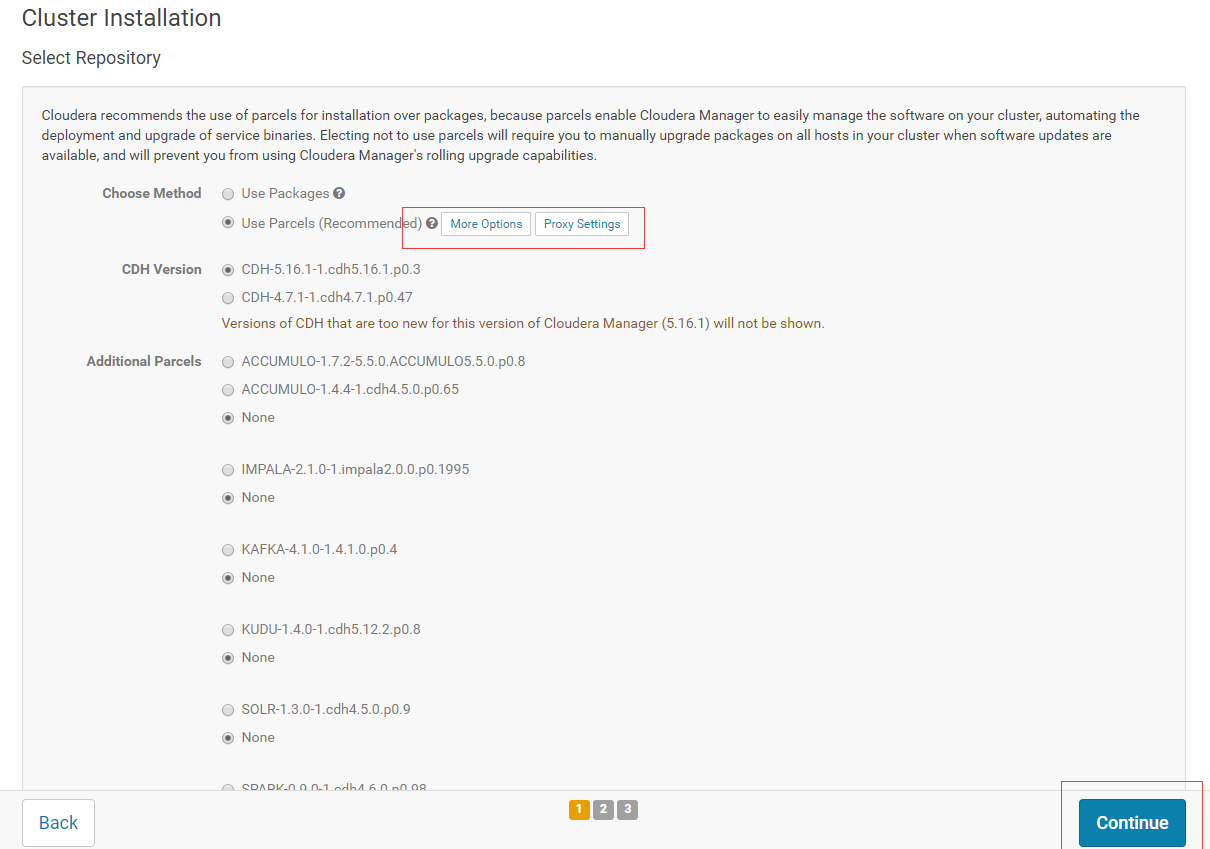

选择安装库,默认即可( 之前的目录都设置完毕)

集群安装 ,若本地 parcel 离线源配置正确 ,则”下载”阶段瞬间完成,其余阶段视节点数与内部⽹络情况决定 。

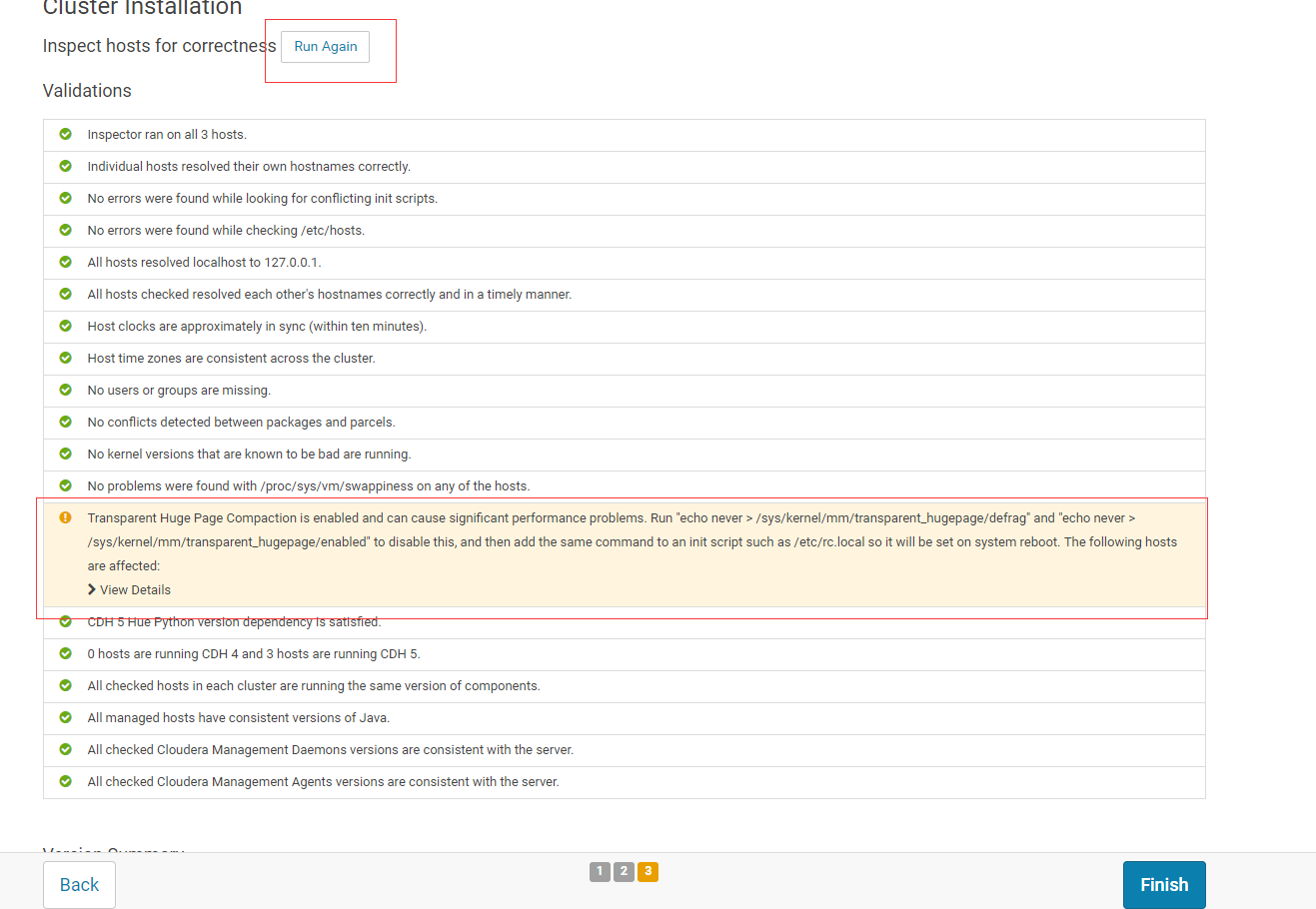

检查主机正确性

上面标红的地方,按照提示我们修改下

# 三台机制都要执行

[root@hadoop001 ~]# echo never > /sys/kernel/mm/transparent_hugepage/defrag

[root@hadoop001 ~]# echo never > /sys/kernel/mm/transparent_hugepage/enabled

然后再次点击

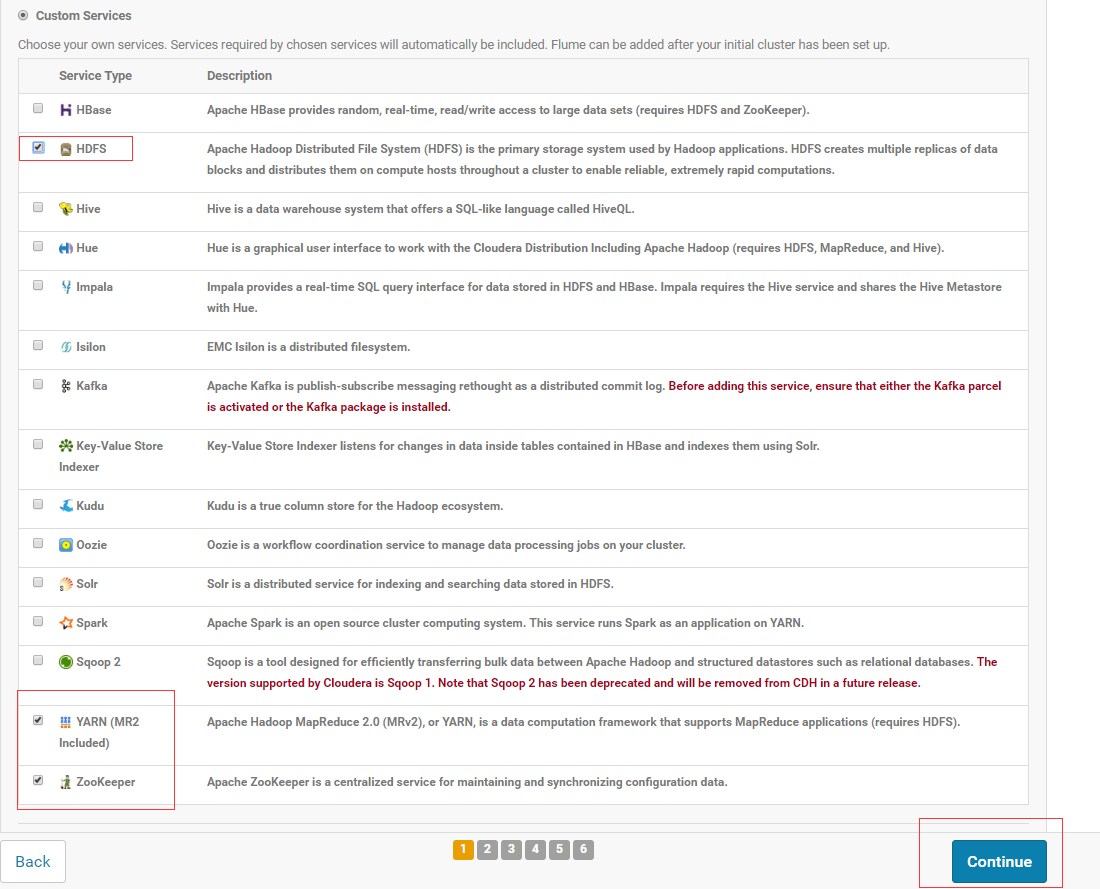

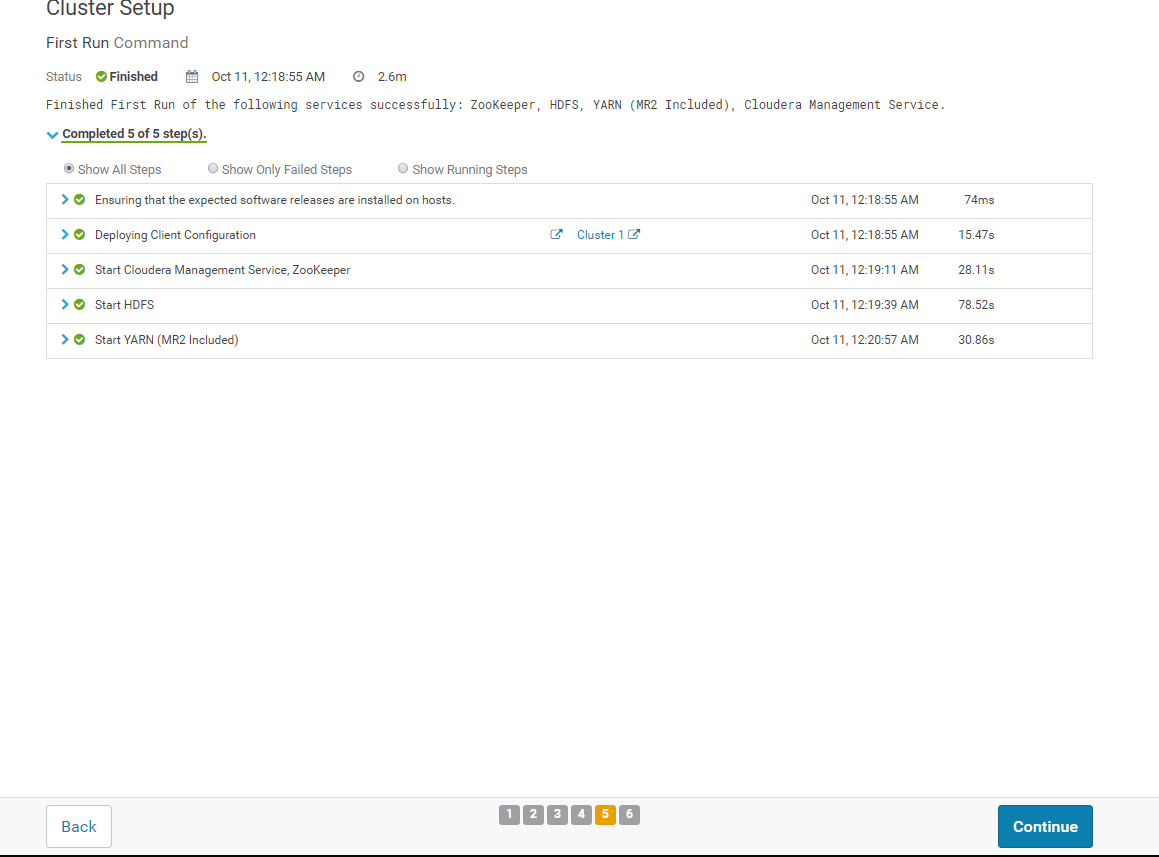

⾃定义服务,选择部署 Zookeeper、 HDFS、 Yarn 服务

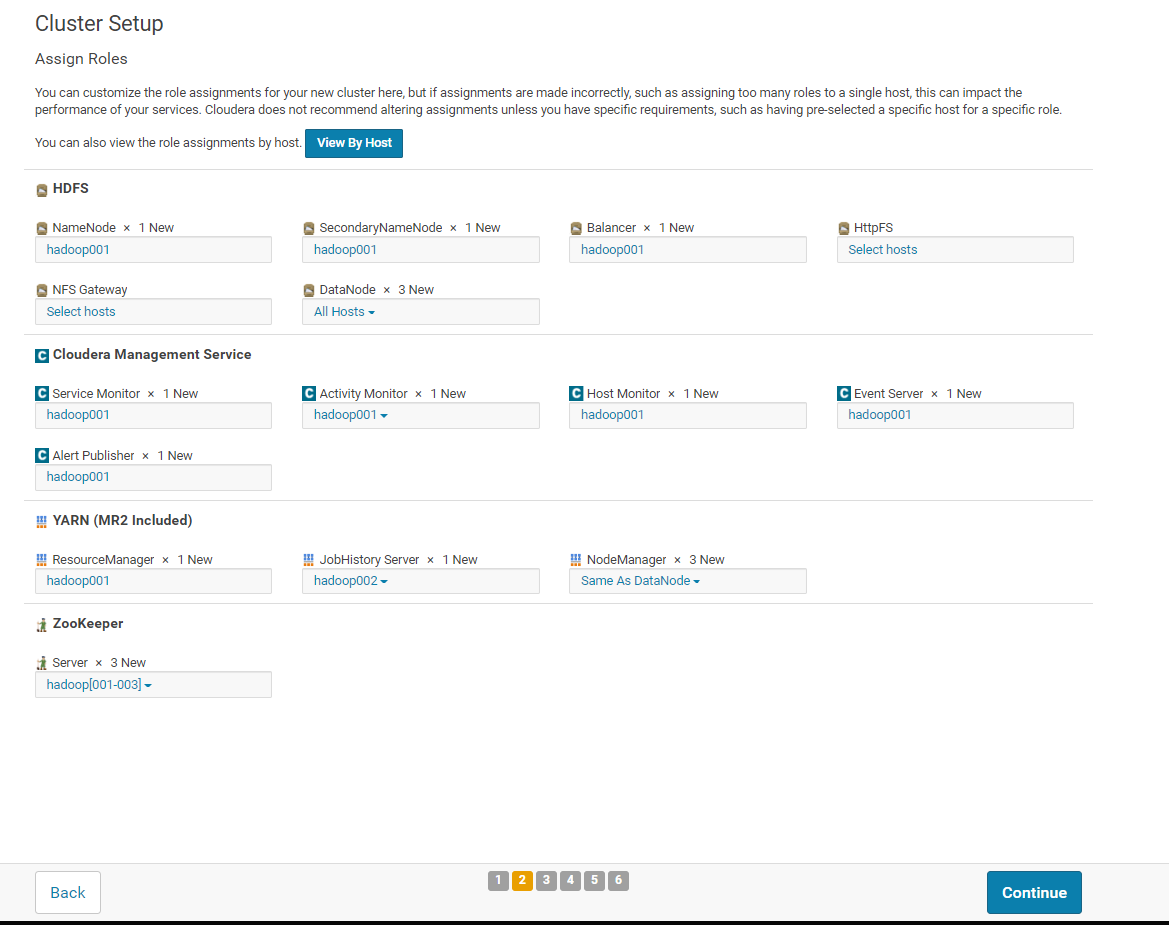

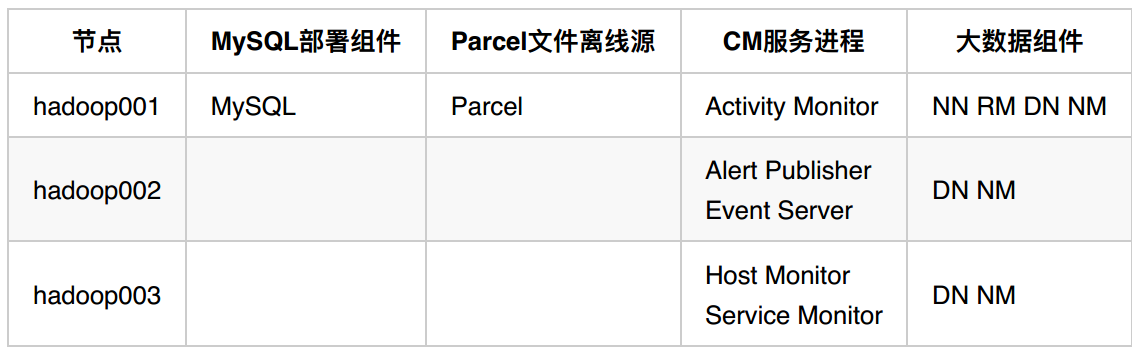

⾃定义⻆⾊分配 (每台机器运行的组件)

最终配置如下

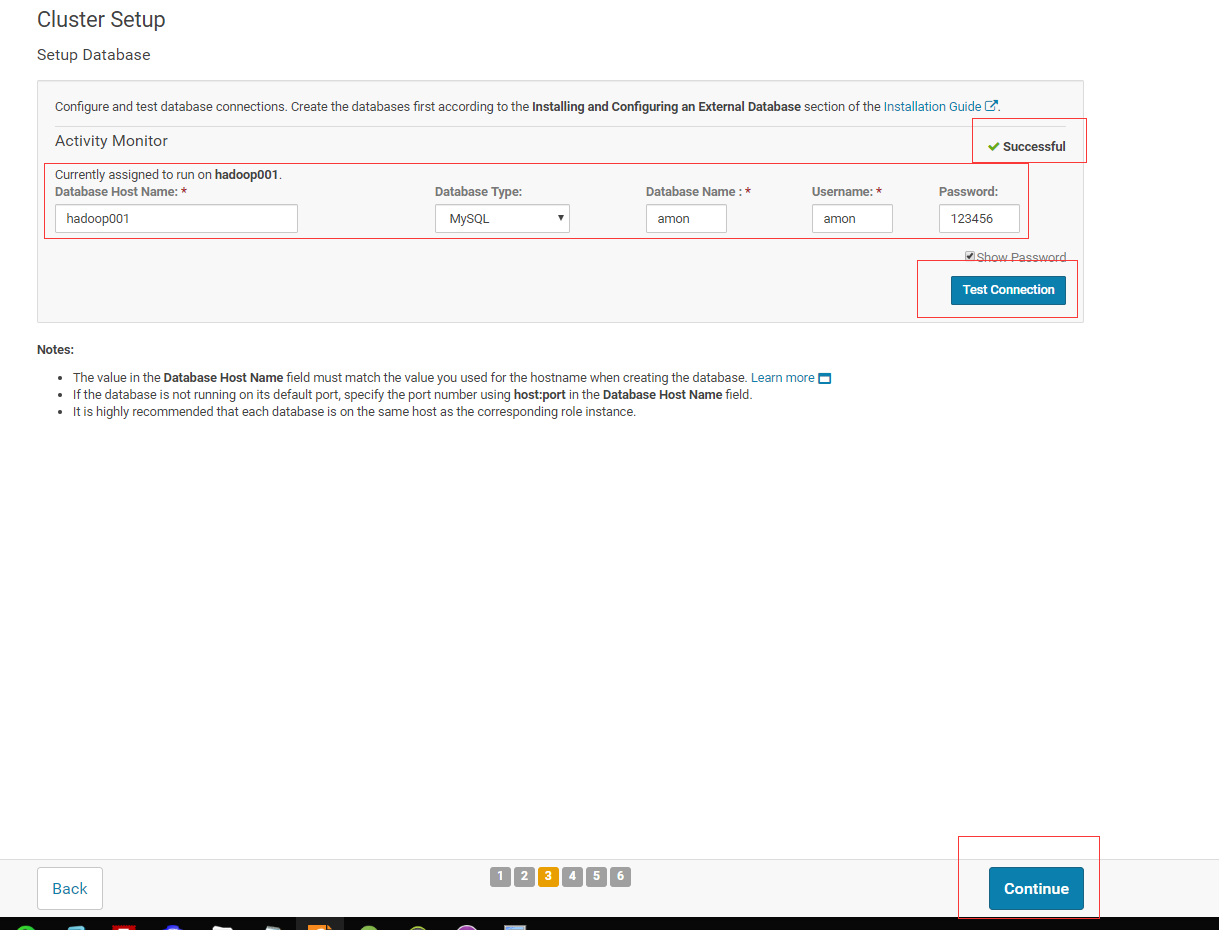

设置数据库

设置 amon ,点击

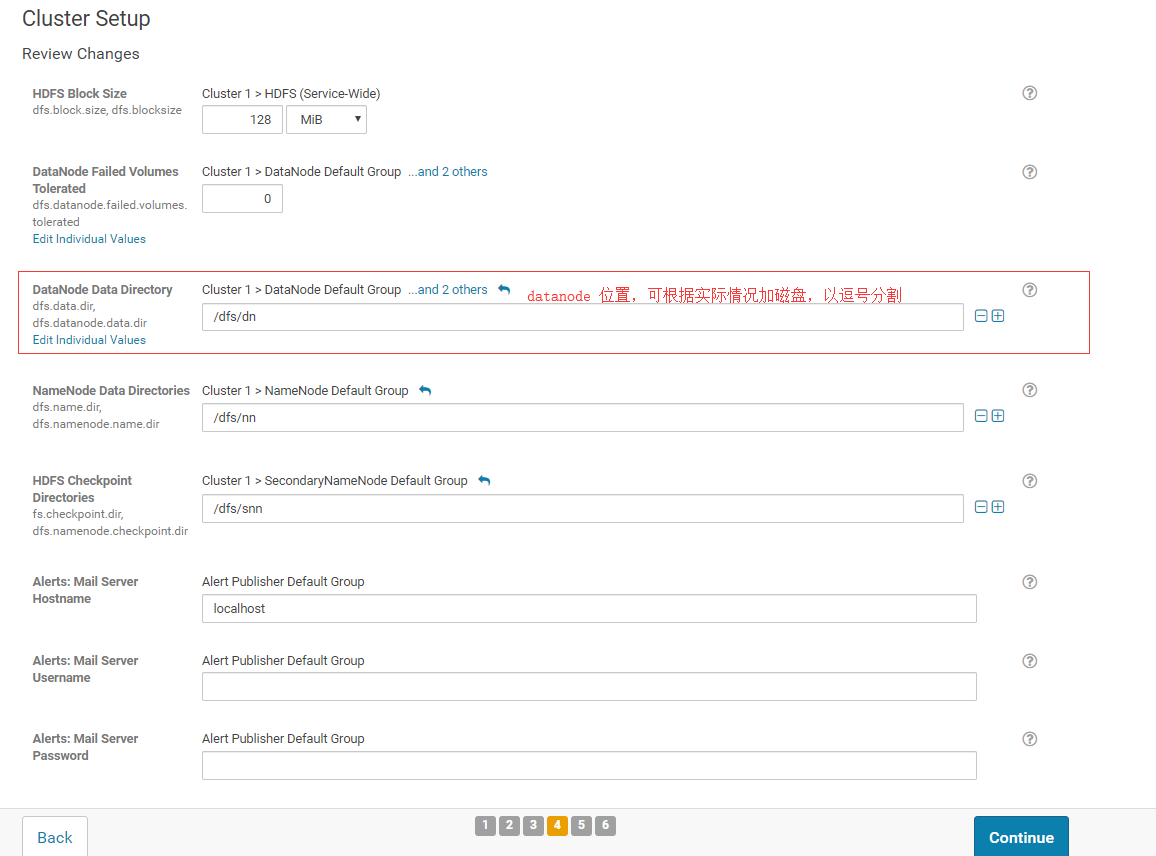

默认即可

错误排查

连接数据库出错

在 CDH Web 的 Setup Database 界面显示连接出错,可能存在以下几种原因

grant all on amon.* TO 'amon'@'%' IDENTIFIED BY '123456';没有指定%,表示任意ip都可访问amon赋予权限后,没有用flush privileges;指令刷新权限jdbc jar没有部署在hadoop001